When I look at the nuclear power industry in the US today, I can't help being reminded of a football offense that can’t gain yardage, whatever it tries.

So many obstacles. And not a few muffed plays.

Every time it looks like nuclear might rally, something happens to shut that rally down.

US nuclear last got on the scoreboard with Vogtle Unit 4, which started putting power on the Georgia grid at the end of April.

But a long scoring drought looms in the future.

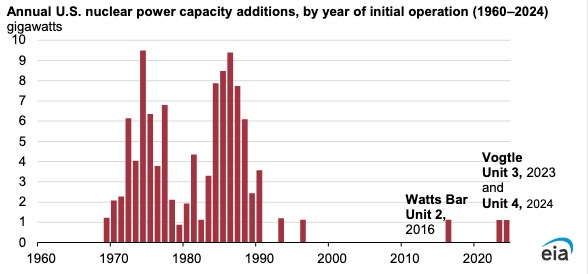

Exactly zero nuclear reactors are under construction in the US right now. A simple visual tells the tale:

Much ink has been spilled, including my own, on the lessons of Vogtle.

Did the cost overruns prove that large nuclear is no longer possible in the US?

Or, on the contrary, did we learn enough from Vogtle that now is, in fact, an opportune moment for someone to try building another AP1000? And get it right this time?

If the latter is true, there's some mild urgency. The supply chain rebuilt for Vogtle, at so much pain and cost, will not keep forever.

While waiting for someone to step up and take a big swing at another Vogtle, my sports advice to the US nuclear industry is: forget home runs.

Try to hit some singles.

My modest proposal is that the US nuclear industry should focus on building micro-ish reactors for data centers — and fugget about the public power grid.

I’ll take the second point first.

The grid is a mess.

And it’s not about to be fixed anytime soon.

Nor do many of the current proposals to ‘fix the grid’ have much to do with actually fixing the grid.

The renewables lobby’s talk of ‘fixing the grid’ is code: the lobby would like tax- and rate-payers to bankroll the transmission its projects need.

Highly instructive recently was a $6.1 billion, 10-year transmission plan approved by CAISO, the California Independent System Operator, on 23 May 2024.

Only $1.5 billion of that is actually for grid reliability projects. The other $4.6 billion is to build transmission lines to offshore wind projects in Northern California.

A seismic shift relating to the grid took place in December 2023.

That’s when a consulting firm called GridStrategies came out with a presentation called “The Era of Flat Power Demand is Over”.

The report’s authors, John D. Wilson and Zach Zimmerman, studied the filings that utilities around the US are required to make with the Federal Energy Regulatory Commission (FERC).

In those submissions, the utilities are asked to forecast how much additional electricity their regions will need over the next five years.

The utility forecasts were shocking — on the upside.

For one example, Portland General Electric in Oregon doubled its forecast for new electricity demand over the next five years, citing data centers and “rapid industrial growth” as the drivers.

The GridStrategies presentation¹ had three simple bullet points:

- The nationwide forecast of electricity demand shot up from 2.6% to 4.7% growth over the next five years, as reflected in 2023 FERC filings.
- Grid planners forecast peak demand growth of 38 gigawatts (GW) through 2028, requiring rapid planning and construction of new generation and transmission.
- This is likely an underestimate.

In March, the New York Times and the Washington Post got around to writing stories based on the GridStrategies report.²

One might think that “rapid industrial growth” would be reported as good news.

Apparently not at the Times and the Post.

The subhead on the Times story warned “A boom in data centers and factories is straining electric grids and propping up fossil fuels.”

The Post author worried that the increase in demand “threatens to stifle the transition to cleaner energy.”

Out of curiosity, I studied the headshots and capsule bios of the reporters, Brad Plumer (Times) and Evan Halper (Post).

It’s a safe bet that neither had been born the last time US electricity demand grew at over 5% per year.

That was in the 1960s.

In some years of that decade, it grew at 7% and 8%.

Since 2000, US electricity demand has grown, on average, less than 1% per year.

A lot of ‘us’, depending on exactly how old ‘us’ are, haven’t seen demand growth like this in living memory.

Efficiency and conservation are great. They helped keep electricity demand down for years.

But a dirty secret of that success is that we were eating our assets.

Those were left to us by those naïve 1960s engineers who believed supply should exceed demand by a safety margin of 15%.

We are now living very close to the bone. There’s no more slack.

Which is why even California didn’t dare shut down a legacy nuclear plant like Diablo Canyon.

Thanks to our locust years — the decades of under-investing in reliables and over-investing in renewables — the blackouts are coming.

With mathematical certainty.

Depending on the weather.

In May, the New York Independent System Operator warned that three consecutive days this summer with temperatures above 95°F would leave it 1,400 megawatts short.

That’s power for one million homes.
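A quick back-of-the-envelope check on that conversion, as a minimal sketch: dividing the shortfall by the number of homes gives the implied draw per home. The 10,500 kWh-per-year household figure used for comparison is an approximate assumption for a typical US home, not a NYISO number.

```python
HOURS_PER_YEAR = 8760  # 365 days x 24 hours, ignoring leap years

def kw_per_home(shortfall_mw: float, homes: int) -> float:
    """Implied draw per home, in kW, if the shortfall is spread evenly."""
    return shortfall_mw * 1000 / homes

def average_home_kw(annual_kwh: float) -> float:
    """Convert a home's annual consumption (kWh) to its average draw (kW)."""
    return annual_kwh / HOURS_PER_YEAR

implied = kw_per_home(1400, 1_000_000)  # NYISO shortfall and homes figure from the text
typical = average_home_kw(10_500)       # ASSUMPTION: rough annual kWh for a US home

print(f"implied draw per home: {implied:.2f} kW")      # 1.40 kW
print(f"assumed year-round average: {typical:.2f} kW")  # 1.20 kW
```

Air conditioning during a heat wave plausibly pushes a home above its year-round average, so 1.4 kW per home is in a reasonable range for a hot-afternoon peak.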

I won’t mention Indian Point. But since I just did, I’ve written about that, too.

My advice to the nuclear industry is: being public-spirited is a great and good thing.

But the grid doesn’t have to be your problem. Or your customer’s.

The best position to take vis-à-vis the grid is: off it.

Think Generac.

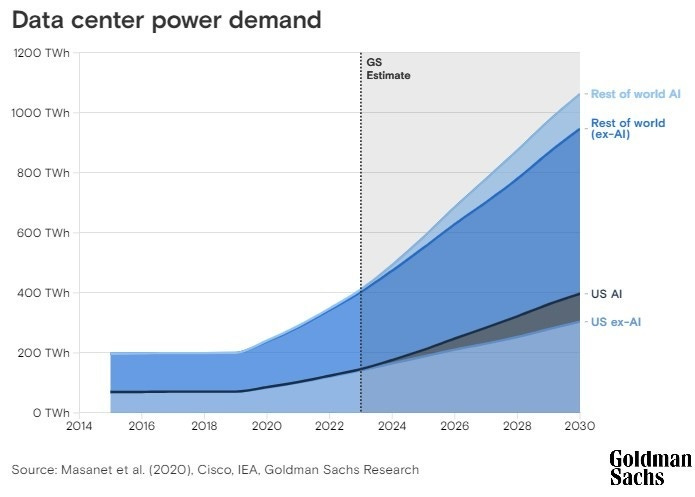

Estimates for future data center electricity demand vary widely.

Some, given all the recent hype about AI, are a bit suspect.

But all pretty much point in the same direction: straight up.

Morgan Stanley projects that power use from data centers “is expected to triple globally this year.”³

McKinsey sees power demand from IT equipment alone — excluding, importantly, the air conditioning — in US data centers more than doubling to 50 gigawatts by 2030, up from 21 GW in 2023.
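To put McKinsey’s figures on the same footing as the growth rates of the 1960s, one can convert them to a compound annual growth rate. A minimal sketch, assuming the 21 GW and 50 GW endpoints fall in 2023 and 2030 (seven years apart). Note that this is growth in data-center IT load only, not total US demand.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate that turns `start` into `end` over `years`."""
    return (end / start) ** (1 / years) - 1

# McKinsey's US data center IT load, as quoted above: 21 GW (2023) -> 50 GW (2030)
rate = cagr(21, 50, 2030 - 2023)
print(f"implied growth: {rate:.1%} per year")  # implied growth: 13.2% per year
```

That is well above the 7% to 8% years of the 1960s, though for one slice of the load rather than the whole grid.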

The International Energy Agency (IEA) sees data center demand doubling worldwide as early as 2026.⁴

Aside: Sadly, we can no longer trust IEA numbers. The agency appears to have given in to climate alarmism.

I like Goldman’s chart⁵ best, since it splits the projection with and without AI:

In a functioning market economy, an increase in demand is — eventually — met by an increase in supply.

We don’t have that.

We have a Net Zero economy.

In former command-and-control economies, if you wanted a consumer item that differed from the one-size-fits-all style the apparatchiks had on offer, you had a problem.

The public grid can no longer offer electricity that is reliable.

If you want that, you are going to have to work for it.

To any budding Harvard MBA looking for a case study, I would recommend Switch Inc., a Las Vegas–headquartered data center company and provider of colocation, telecommunications, and cloud services.

Switch went off-grid in order to go solar.

Switch presently operates (or is in the process of building; it’s a little hard to tell) five huge data centers located to cover the US by region.

Switch gives them cute names: there’s the “Core” in Las Vegas; the “Citadel” in Tahoe Reno, Nevada; the “Pyramid” in Grand Rapids, Mich.; the “Keep” in Atlanta; and “The Rock” coming soon to Austin, Texas.

Business history trivia aside: Switch’s original Las Vegas location was the site of Enron’s secretive broadband operation. The site was selected by Enron because it sat atop a strategic fiber optic cable hub and provided easy, not-very-regulated access to the California market. Switch’s Grand Rapids ‘Pyramid’ building was formerly the research headquarters of Michigan metal-bender Steelcase. Steelcase, unlike Enron, is still in business.

An obsession of Switch’s CEO, Rob Roy, has been to use only ‘100% green’ energy for his data centers.

That also makes for a ‘difference’ in the company’s sales pitch to the tech industry.

In 2016, Switch went through an acrimonious break-up with NV Energy, the Berkshire Hathaway–owned public utility in Nevada.

Roy wanted to build his own solar farms to power Switch’s data centers. NV Energy was, at that point, in his way.

In the utility business, DIY is called going ‘behind-the-meter’.

Actually, if you’re entirely self-sufficient, there may be no meter. Or, if you sometimes generate more electricity than you need and want to sell the surplus to a utility, a two-way meter.

In any event, Switch apparently now has four large solar farms in Nevada, the one near Reno generating 127 MW.

One might well ask how Switch keeps its servers running at night.

The answer, apparently, is with a lot of Tesla Megapacks, purchased from Switch’s Reno neighbor, Elon Musk:

A single Megapack 2 XL is rated at 3.916 MWh and has a list price of $1.39 million.
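How many of those would it take to carry a data center through the night? A rough sizing sketch, assuming a hypothetical 100 MW data center running 12 hours on batteries. It counts rated energy only and ignores round-trip losses, usable depth of discharge, degradation, the packs’ power rating, and cloudy days, all of which would push the number up.

```python
import math

MEGAPACK_MWH = 3.916         # Megapack 2 XL energy rating, from the text
MEGAPACK_PRICE_USD = 1.39e6  # Megapack 2 XL list price, from the text

def packs_needed(load_mw: float, hours: float) -> int:
    """Smallest whole number of packs whose rated energy covers load_mw for `hours`.

    Ignores round-trip losses, depth-of-discharge limits, and degradation.
    """
    energy_mwh = load_mw * hours
    return math.ceil(energy_mwh / MEGAPACK_MWH)

# HYPOTHETICAL: a 100 MW data center running 12 hours overnight on batteries
packs = packs_needed(100, 12)
cost = packs * MEGAPACK_PRICE_USD
print(packs, f"packs, about ${cost / 1e6:,.0f} million at list price")
# 307 packs, about $427 million at list price
```

And that is before installation, and before sizing for a string of cloudy days.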

The cloud computing giants, with approximate market shares, are: Amazon Web Services (AWS), 32%; Microsoft Azure, 23%; and Google Cloud Platform (GCP), 10%.

The tech giants, if not as obsessed as Switch’s Rob Roy, are plenty touchy about their carbon footprints.

And not without reason. Microsoft and Google consume more electricity than the nation of Slovenia:

In the 1500s, the Church sold indulgences to sinners.

Carbon offsets and power purchase agreements (PPAs) are the indulgences of contemporary climatism.

As in Dante’s Purgatory, various levels of salvation are available.

At one level, only currently produced CO₂ is offset.

A higher level, which Google says it has attained, comes by offsetting all emissions ever since the company’s founding.

Nature-based offsets involve doing good deeds such as planting trees or legally protecting a nature preserve, often in remote parts of the world.

PPAs involve a different calculus of sin.

If you have no choice other than to consume dirty electricity yourself, you can pay for someone to generate an equal amount of clean electricity. They add it to the common pool.

The complexity of the natural carbon cycle and the intermittency of renewables have provided full employment for carbon accountants, who presumably wear green eyeshades.

A certain amount of creativity, or eyewash or greenwash, is called for in carbon offset accounting.

Trees take several decades to grow, and can burn down. Are they offsetting emissions made today?

The well-known gaps in renewable generation, which typically must be filled in by natural gas generation, give carbon accountants headaches.

A currently fashionable, if yet-more-complicated, idea is to do the accounting on a ‘24/7’ basis: clean electricity counts only if it is generated in the same hour it is consumed.

Like I said. Full employment.
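The difference between the two kinds of bookkeeping is easy to show. The sketch below uses a made-up one-day profile (a flat 100 MW load and a solar PPA delivering 200 MW for 12 daylight hours) to compare conventional volumetric matching, where total clean purchases are netted against total consumption, with hourly ‘24/7’ matching, where only clean power delivered in the same hour counts. The numbers are hypothetical, and real 24/7 scoring schemes differ in detail.

```python
def volumetric_match(load: list[float], clean: list[float]) -> float:
    """Share of total consumption covered by total clean purchases (netted over the period)."""
    return min(sum(clean) / sum(load), 1.0)

def hourly_match(load: list[float], clean: list[float]) -> float:
    """Share of consumption covered by clean supply delivered in the same hour."""
    matched = sum(min(l, c) for l, c in zip(load, clean))
    return matched / sum(load)

# HYPOTHETICAL one-day profiles, in MWh per hour
load = [100.0] * 24                            # flat 100 MW data center
solar = [0.0] * 6 + [200.0] * 12 + [0.0] * 6   # 200 MW from 6 a.m. to 6 p.m.

print(f"volumetric: {volumetric_match(load, solar):.0%}")  # volumetric: 100%
print(f"hourly:     {hourly_match(load, solar):.0%}")      # hourly:     50%
```

Same megawatt-hours, half the score: the nighttime gap is exactly the part that natural gas usually fills.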

Nuclear would appear to be a self-evident solution to the data center electricity problem.

Technically.

Nuclear offers steady power with no carbon emissions. And reactors have more than enough capacity for the job.

Maybe too much, as I will argue below.

Data centers come in a number of sizes, from ‘micro’ to ‘hyperscale’.

An ‘average’ data center is defined as having between 2,000 and 5,000 servers; between 20,000 and 100,000 square feet of floor space; and a power draw of around 100 megawatts.

‘Hyperscale’ is a now-ambiguous term used to refer to anything larger.

Sometimes a lot larger. A data center run by China Telecom in Inner Mongolia is 10.7 million square feet, including living quarters.

Air conditioning often accounts for half the power budget of a data center. Some have been sited near the Arctic Circle, so they can just open the windows.
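The industry’s usual yardstick for this overhead is power usage effectiveness (PUE): total facility power divided by the power delivered to the IT equipment. A minimal sketch, treating all non-IT overhead as cooling for simplicity. Under that same assumption, it also shows what McKinsey’s IT-only 50 GW figure would become once air conditioning is added back.

```python
def pue_from_overhead_share(overhead_share: float) -> float:
    """PUE when a given fraction of total facility power goes to non-IT overhead.

    IT power = total * (1 - overhead_share), so PUE = total / IT = 1 / (1 - overhead_share).
    """
    if not 0 <= overhead_share < 1:
        raise ValueError("overhead_share must be in [0, 1)")
    return 1.0 / (1.0 - overhead_share)

def facility_power(it_power: float, pue: float) -> float:
    """Total facility power implied by an IT load and a PUE."""
    return it_power * pue

half_for_cooling = pue_from_overhead_share(0.5)  # cooling at half the power budget
print(half_for_cooling)                          # 2.0
print(facility_power(50, half_for_cooling))      # 100.0 (GW, if applied to a 50 GW IT load)
```

A site cold enough to ‘just open the windows’ pushes the overhead share, and with it the PUE, down toward 1.0.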

The target for uptime is called the ‘five nines’ — 99.999% of the time. This makes redundant power sources and standby generators a must.
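The arithmetic behind that target is unforgiving. A short sketch: five nines leaves barely five minutes of downtime a year, and one way to get there is to put power sources in parallel. The availability figures below are hypothetical, and the parallel formula assumes the sources fail independently, which a shared grid outage or a common fuel supply can easily violate.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600; ignores leap years

def downtime_minutes(availability: float) -> float:
    """Expected minutes of downtime per year at a given availability."""
    return (1.0 - availability) * MINUTES_PER_YEAR

def parallel_availability(*sources: float) -> float:
    """Availability of redundant sources in parallel: down only if all are down at once.

    ASSUMES failures are independent.
    """
    p_all_down = 1.0
    for a in sources:
        p_all_down *= 1.0 - a
    return 1.0 - p_all_down

print(f"{downtime_minutes(0.99999):.1f} min/yr at five nines")  # 5.3 min/yr at five nines
print(f"{downtime_minutes(0.999):.0f} min/yr at three nines")   # 526 min/yr at three nines
combined = parallel_availability(0.999, 0.999)  # HYPOTHETICAL: two 99.9% sources
print(f"{combined:.6f}")                        # 0.999999
```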

The first problem with using nuclear to run a data center is, where to get it?

A tip of the hat to Dan Yurman of Neutron Bytes for some of this.

One idea is to put your data center next to an existing nuclear plant.

In March, Amazon Web Services (AWS) bought an existing data center from Houston-based Talen Energy.

Talen is the majority owner and operator of the Susquehanna Steam Electric Station in Salem Township, Pennsylvania. The data center, originally built for crypto mining, is next door:

The data center can use up to 960 megawatts. Susquehanna can generate 2.5 GW.

Apple operates a large data center in Mesa, Arizona.

Apple proudly points out that the company bankrolled a 50-megawatt, 300-acre solar farm in nearby Florence, as a sort of penance for powering Mesa from the public grid.

Apple, as a wholesaler, sells that solar power to Salt River Project, a state of Arizona agency that is one of two utilities in the Phoenix area.

Mesa is just a stone’s throw away from the Palo Verde Nuclear Generating Station, of which Salt River is a part owner.

Palo Verde already sells a surprising amount of electricity into California. It would be no surprise if Apple Mesa is part-nuclear powered.

In 2020, Microsoft made an ambitious pledge, highly lauded at the time by media outlets like Bloomberg Green, to remove more carbon than it emits by 2030.

We’ve recently seen what I call the Microsoft Mea Culpa story: “Microsoft’s AI Push Imperils Climate Goal as Carbon Emissions Jump 30%”, to use Bloomberg’s headline.⁶

Microsoft has made two recent ‘big hires’ out of the nuclear industry.

Microsoft-watchers conclude the company is building out a data center nuclear power team.

One of the strangest Microsoft rumors going around is that it and OpenAI are considering building a 5 GW 'Stargate' AI data center.

Five gigawatts would require four or five Vogtle-size reactors.

Speaking of Vogtle, that’s another obvious site for a data center.

Back in the real world, last year Microsoft signed a power purchase agreement with nuclear power producer Constellation Energy, mentioning a Microsoft data center in Boydton, Virginia.

That deal, however, is a PPA ‘offset’. Nuclear electricity generated by Constellation will not actually flow to Boydton.

But Northern Virginia, given its proximity to DC, may be the future battleground, or ground zero, of data center nuclear. Loudoun County is known as “Data Center Alley.”

Dominion Energy Virginia is the utility. Since 2019, Dominion has connected 75 data centers with 3 GW of capacity. In a Securities and Exchange Commission (SEC) filing for 2023, Dominion said 21% of its electricity sales were to data centers.

In May, Bloomberg reported that data center developers are asking Dominion for a lot more power: one or two Vogtles’ worth.

The sports fan lives in hope. Maybe next year…

Small modular reactors, SMRs, are the perennial hope of the nuclear industry.

We can add Clinch River, where the Tennessee Valley Authority (TVA) has plans to build two GE-Hitachi BWRX-300 SMRs, as a potential site for nuclear-powered data centers.

I calculate the nominal size of a purpose-built data-center-nuclear-widget as smaller than a 300-megawatt SMR, but larger than a single 30 MW ‘micro’ reactor.

So maybe several of those.

Small reactors dedicated to data centers would be a niche market, to be sure.

But niche markets have been extraordinarily important in keeping technologies alive during troubled times. Photovoltaic solar would have died in infancy had it not been for satellites and specialty uses at remote sites.

Most important, the data center market may just be big enough to kick off a virtuous cycle of competitive innovation and cost-reduction, something the lethargic US nuclear industry desperately needs.

If, of course, the US Nuclear Regulatory Commission, the NRC, allows it.

Like I said. The sports fan lives in hope.

Ironically, an AI is now taking on NRC paperwork.

The NRC has over 2 million documents, 52 million pages, in its ADAMS database.

It can be searched by keyword. Good luck with that.

A start-up called Atomic Canyon recently made an arrangement with the Oak Ridge Leadership Computing Facility (OLCF) at Oak Ridge National Laboratory, under which it will attempt to graft a Google AI–type front end onto ADAMS to answer nuclear questions.

The project has no shortage of horsepower. Oak Ridge has what was, until recently, the world’s fastest supercomputer: Frontier (OLCF-5), built by Hewlett Packard Enterprise.

Aside: The fiction writer in me is eager to see what happens when that AI starts hallucinating…

SMRs appear to be duplicating, disappointingly, the cost overrun problems of their larger brethren.

There’s understandable pressure to scale them up. If you must carry the regulatory burden and first-of-a-kind (FOAK) costs up front, you’re tempted to go for more bang for those bucks.

NuScale, for example, pretty late in the game upped the size of its 50 MW reactor to 77 MW.

NuScale, by the way, last year announced a deal with blockchain firm Standard Power for 24 SMRs at two US sites.

For a dedicated data-center reactor, I’d suggest heading in the opposite direction.

Scale down.

The recently revved-up Oklo, Inc., whose stock started trading in May, seems to get this.

As it should, since its chairman is OpenAI co-founder Sam Altman.

I’m not applying for the job, but a recent posting by Oklo for a “Director of Data Center Solutions” makes for interesting reading:

> The Director of Data Center Solutions is responsible for developing and implementing our data center market strategy. Oklo’s advanced fission power plants offer data centers a path to energy independence—one that avoids bottlenecks in the grid and competition for energy with local communities. Our 24/7 clean energy solution can provide the power to unlock the benefits of AI and cloud computing. As part of an entrepreneurial team in this rapidly growing business area, you will play a key role in understanding the needs of our data center customers and help them access clean, reliable, affordable energy.

Oklo's flagship product is called the Aurora powerhouse, designed to produce 15 megawatts of electricity.

It’s a somewhat different reactor design from the light-water reactors in today’s US fleet, using fast neutrons and high-assay low-enriched uranium (HALEU) fuel.

Oklo has yet to get NRC approval for its Aurora design. Its first license application was handed back by the NRC in 2022 in a somewhat embarrassing, dog-ate-my-homework exchange.

Despite that, Oklo is moving fast.

In April, it announced a ‘pre-agreement’ (which I think means a letter of intent) with Equinix, a very large data center and colocation provider.

If Oklo starts getting its Aurora powerhouses built, Equinix will buy either electricity or PPAs for its data centers. The deal term is 20 years.

On 23 May, Oklo announced it had signed a non-binding letter of intent to supply a company called Wyoming Hyperscale with 100 megawatts of power for a state-of-the-art data center campus.

Wyoming Hyperscale touts itself — Rob Roy at Switch take notice — as ‘Ultra-Green’. Waste heat from the servers, the company says, is used to grow vegetables in an indoor farm.
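For a sense of scale, here is how many 15 MW Aurora units the 100 MW Wyoming Hyperscale letter of intent would imply. The one-spare (‘N+1’) margin is an illustrative assumption reflecting data-center uptime demands, not anything Oklo has announced; the 30 MW ‘micro’ reactor is included for comparison.

```python
import math

def units_needed(load_mw: float, unit_mw: float, spares: int = 1) -> int:
    """Reactor units needed to cover a load, plus spare units for redundancy.

    `spares=1` models an N+1 margin (an illustrative assumption).
    """
    return math.ceil(load_mw / unit_mw) + spares

aurora_bare = units_needed(100, 15, spares=0)  # 15 MW Aurora units, no spare
aurora = units_needed(100, 15)                 # with one spare
micro = units_needed(100, 30)                  # 30 MW 'micro' reactors, one spare
print(aurora_bare, aurora, micro)              # 7 8 5
```

Either way, it lands at ‘several of those’, consistent with the sizing argument earlier.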

No story mentioning AI would be complete without some of the scary stuff.

I’m not worried about some AI taking over the world.

The scary stuff is sociopolitical.

If the demand projections are half right, electricity is going to be a scarce resource.

People fight over those.

We can already detect a dog-in-the-manger subtext in media stories about ‘power hungry AI’.

The AIs are going to take ‘my’ power.

Or, more plausibly, outbid me for it.

It’s a specter of humans living in AI apartheid.

So maybe ‘we’ should outlaw AI, while we can still watch Netflix (which runs on Amazon Web Services) and charge our iPhones.

Dirigisme — ‘state control of economic and social matters’ — is a slippery slope.

In the EU, where dirigisme gets more traction than here in Texas, we already see a ‘virtue scale’ being created to pass judgement on large electricity users.

The EU’s Energy Efficiency Directive (EED) requires data centers to report their energy consumption, power utilization, water usage, waste heat utilization, use of renewables, and so on, starting 15 September 2024.

I’m not entirely clear where virtue comes into electricity use.

But, for example, there are people who feel that bitcoin mining has NRSV — No Redeeming Social Value.

Which was the name of a hardcore punk band in the 1980s.

That doesn’t mean I think the state has a right to ban it.

The New York Times may think that. Its headline last year was “Bitcoin mines cash in on electricity — by devouring it, selling it, even turning it off — and they cause immense pollution. In many cases, the public pays a price.”

In Texas, a target got put on the back of bitcoin mining last summer, during ERCOT’s power outage scares.

Most of the bitcoin miners suspended operation voluntarily. Blackout was avoided.

But some miners were controversially compensated by ERCOT on the legal ground that they had contracted for the power and lost revenue going without it.

A technical peculiarity of bitcoin mining is that it can suspend and restart with relatively little pain.

That’s not true with data centers. Shutting them down would be a liability lawyer’s dream come true.

Last summer, the free-market ideals of most Texas legislators managed to weather the storm of the bitcoin fracas.

But they were tested.

In Navarro County, for example, 1,200 residents signed a petition demanding that, well, somebody shut down a local bitcoin mine.

The pitchforks were out.

A key line from that petition was: “We do NOT want this enormous burden on our already fragile infrastructure.”

One has to ask: Was the problem the bitcoin mine?

Or that the infrastructure was allowed to get so fragile?

We’re in for a bumpy ride.

² The Post story appeared first: “Amid explosive demand, America is running out of power” by Evan Halper, March 7, 2024. A NY Times piece, with an animated graphic, followed a week later: “A New Surge in Power Use Is Threatening U.S. Climate Goals” by Brad Plumer and Nadja Popovich, March 14, 2024.

³ For Morgan Stanley, see a report called “Powering the AI Revolution”, March 8, 2024. McKinsey & Company’s multi-subject “Global Energy Perspective 2023” was published January 16, 2024. McKinsey’s estimate on IT use is quoted from Reuters, “US electric utilities brace for surge in power demand from data centers”, by Laila Kearney and others, April 10, 2024.

⁵ Goldman Sachs, “AI is poised to drive 160% increase in data center power demand”, May 14, 2024.

⁶ “Microsoft’s AI Push Imperils Climate Goal as Carbon Emissions Jump 30%”, Akshat Rathi and Dina Bass, Bloomberg, May 15, 2024. Listen also to their interview with Microsoft vice chair and president Brad Smith on Rathi’s Zero podcast.